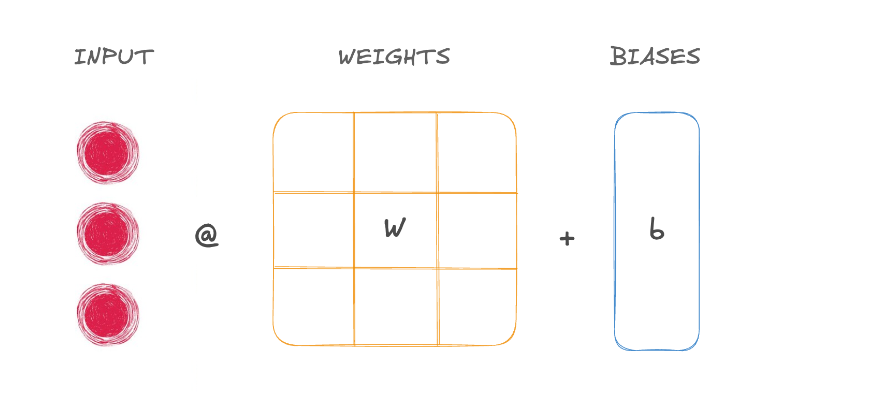

Linear Functions

import torch

import torch.nn as nn

input_tensor = torch.tensor([[0.3471, 0.4547, -0.2356]])

Pass it to a linear layer to apply a linear function

linear_layer = nn.Linear(in_features=3, out_features=2)

output = linear_layer(input_tensor)

Each linear layer has a .weight and a .bias property

linear_layer.weight

linear_layer.bias
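A minimal sketch of what the layer computes: nn.Linear applies output = input @ weight.T + bias, where weight has shape (out_features, in_features) and bias has shape (out_features,). The seed and the name manual_output are only for illustration. The weights are randomly initialized, so the actual output values depend on the seed.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)  # illustrative seed so reruns give the same random weights

input_tensor = torch.tensor([[0.3471, 0.4547, -0.2356]])
linear_layer = nn.Linear(in_features=3, out_features=2)

# weight: (out_features, in_features), bias: (out_features,)
print(linear_layer.weight.shape)  # torch.Size([2, 3])
print(linear_layer.bias.shape)    # torch.Size([2])

# The layer output equals input @ weight.T + bias
output = linear_layer(input_tensor)
manual_output = input_tensor @ linear_layer.weight.T + linear_layer.bias
print(torch.allclose(output, manual_output, atol=1e-6))  # True
```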

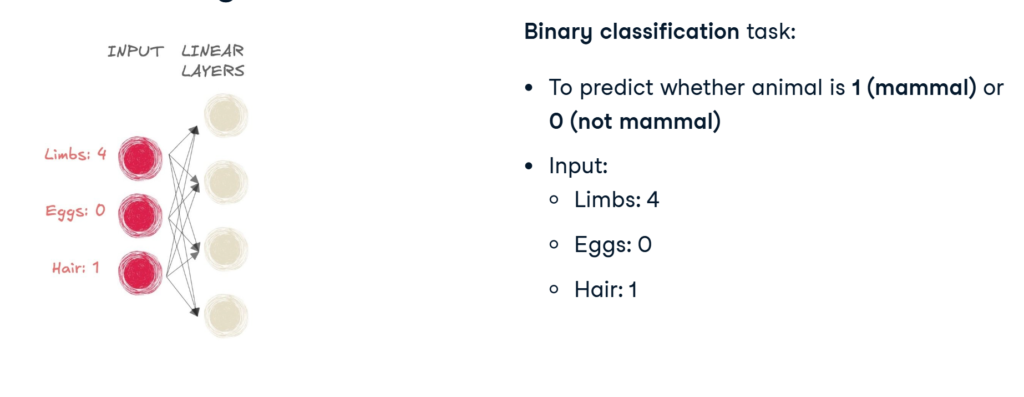

Networks with only linear layers are called fully connected.

Linear layers have connections (or arrows) between each input and output neuron, making them fully connected.

To stack multiple layers, use nn.Sequential()

model = nn.Sequential(
    nn.Linear(10, 18),
    nn.Linear(18, 20),
    nn.Linear(20, 5)
)

Each layer's input size must match the previous layer's output size: input 10 -> output 18 -> output 20 -> output 5
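To check the shapes, here is a small sketch that passes a batch through the stacked model and counts its learnable parameters. The batch of 4 random samples and the name n_params are assumptions made for the example, not part of the original notes.

```python
import torch
import torch.nn as nn

model = nn.Sequential(
    nn.Linear(10, 18),
    nn.Linear(18, 20),
    nn.Linear(20, 5),
)

# Hypothetical batch: 4 samples with 10 features each
batch = torch.randn(4, 10)
output = model(batch)
print(output.shape)  # torch.Size([4, 5])

# Learnable parameters are the weights plus biases of every layer
n_params = sum(p.numel() for p in model.parameters())
print(n_params)  # 683 = (10*18 + 18) + (18*20 + 20) + (20*5 + 5)
```

Only the first dimension of the input (the batch size) is free; the last dimension has to be 10 to match the first layer.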

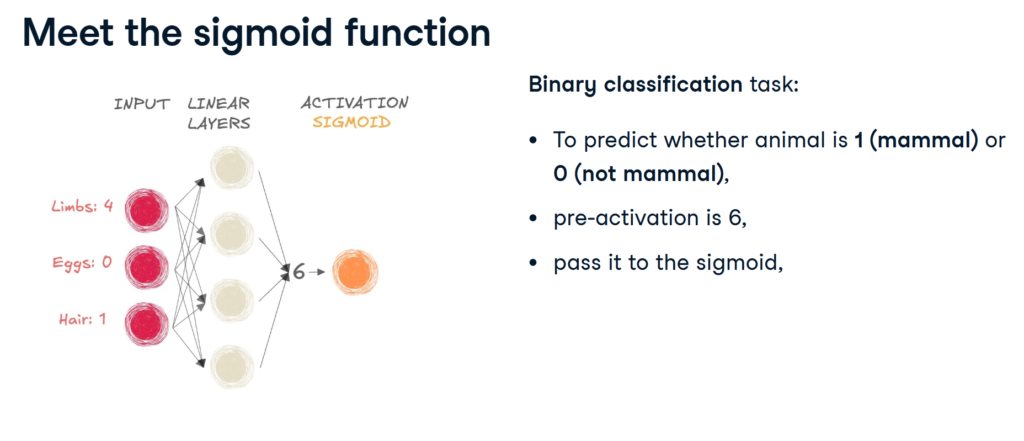

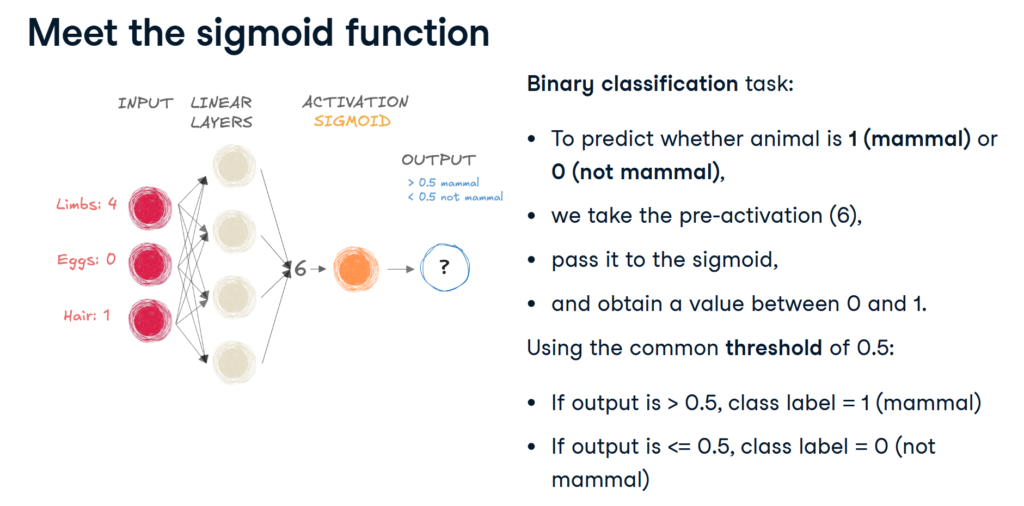

Activation functions (non-linear)

Activation functions add non-linearity to the network
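One way to see why the non-linearity matters: without it, stacked linear layers can be rewritten as a single linear layer, so depth adds no expressive power. The sketch below checks this numerically for two layers. The layer sizes, the seed, and the names first, second, and collapsed are chosen only for illustration.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)  # illustrative seed

first = nn.Linear(3, 4)
second = nn.Linear(4, 2)
x = torch.randn(5, 3)  # hypothetical batch of 5 samples

# Two linear layers applied in sequence
stacked = second(first(x))

# The same mapping as one linear function:
# W = W2 @ W1 and b = W2 @ b1 + b2
combined_weight = second.weight @ first.weight
combined_bias = second.weight @ first.bias + second.bias
collapsed = x @ combined_weight.T + combined_bias

print(torch.allclose(stacked, collapsed, atol=1e-5))  # True
```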

Sigmoid function (usually used for binary classification)

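sigmoid = nn.Sigmoid()

output = sigmoid(input_tensor)

It can also be the last layer of a network, after the linear layers

model = nn.Sequential(
    nn.Linear(6, 4),
    nn.Linear(4, 1),
    nn.Sigmoid()
)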
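As a quick check of what sigmoid does, the sketch below compares nn.Sigmoid with the formula 1 / (1 + exp(-x)) and confirms that every output lies between 0 and 1. The 0.5 threshold for turning a score into a class label is a common convention assumed here, not something stated in the notes.

```python
import torch
import torch.nn as nn

sigmoid = nn.Sigmoid()
input_tensor = torch.tensor([[0.3471, 0.4547, -0.2356]])

output = sigmoid(input_tensor)

# Same result from the formula 1 / (1 + exp(-x))
manual = 1 / (1 + torch.exp(-input_tensor))
print(torch.allclose(output, manual))  # True

# Every value lies strictly between 0 and 1
print(bool(((output > 0) & (output < 1)).all()))  # True

# Assumed decision rule: class 1 if the score exceeds 0.5
predicted_class = (output > 0.5).int()
print(predicted_class.tolist())  # [[1, 1, 0]]
```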

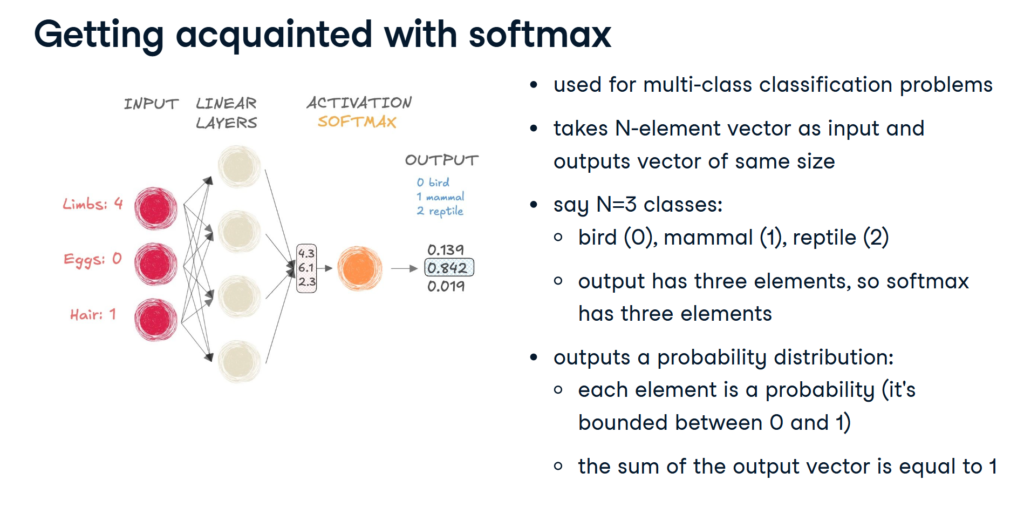

Softmax function (usually used for multi-class classification)

probabilities = nn.Softmax(dim=-1)

output_tensor = probabilities(input_tensor)
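A short sketch of what softmax returns: each value is exp(x_i) divided by the sum of exp over the chosen dimension, so the outputs along dim=-1 sum to 1 and can be read as class probabilities. The names softmax, manual, and predicted are illustrative.

```python
import torch
import torch.nn as nn

input_tensor = torch.tensor([[0.3471, 0.4547, -0.2356]])

softmax = nn.Softmax(dim=-1)  # normalize across the last dimension
output_tensor = softmax(input_tensor)

# Same result from the formula exp(x_i) / sum_j exp(x_j)
exp = torch.exp(input_tensor)
manual = exp / exp.sum(dim=-1, keepdim=True)
print(torch.allclose(output_tensor, manual))  # True

# The probabilities in each row sum to 1 (up to float rounding)
row_sum = output_tensor.sum(dim=-1)
print(torch.allclose(row_sum, torch.ones(1)))  # True

# The predicted class is the index of the largest probability
predicted = output_tensor.argmax(dim=-1)
print(predicted.tolist())  # [1]
```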